Here we explain how sample rate conversion works. As an essential prerequisite, you must understand the principles of sampling. Even if you already understand sampling, read our explanation of the process here; the viewpoint and terms used there are mirrored here and are key to this explanation. Read it now (or, if you’ve read it before, take a quick look at it to refresh your memory), then come back here for the rest.

Upsampling

Sometimes we need to change the sample rate after the fact. We might need to match converter hardware running at a different rate, or we might want to increase the frequency headroom; for instance, frequency modulation, pitch shift, and distortion algorithms can add higher frequency components that might otherwise alias into the passband if we don’t raise the ceiling.

Let’s consider the common case of increasing the sample rate by a factor of two. Maybe we have some music recorded at 48 kHz and want to combine it with tracks recorded at 96 kHz, with 96 kHz playback. This means we need to generate a new sample between each pair of existing samples. One way might be to place the new sample halfway between the two existing samples surrounding it, offsetting it from the first by half their difference. This is linear interpolation, which is essentially a poor low-pass filter, and the results aren’t very good: it leaves us with a reduced frequency response and aliasing. There are better ways to interpolate the new sample points, but there is one that is essentially perfect, creating no new frequency components and not changing the frequency response. We can do this because the original signal was band-limited to begin with, so we know the characteristics of what lies between the sampled points: it’s essentially the shape dictated by the original low-pass filter we used in the sampling process. By reversing the process, we can get the original band-limited signal back and resample it at twice the original rate, having lost nothing.
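To make the weakness of linear interpolation concrete, here is a minimal Python sketch. The function names are illustrative and not from any library. The midpoint rule is equivalent to inserting zeros and then filtering with the 3-tap FIR [0.5, 1, 0.5]. Evaluating that filter’s response shows two problems. The top of the original band is down about 6 dB. The image just above the old Nyquist frequency is only partly attenuated.

```python
import math

def linear_upsample2(x):
    """Double the sample rate by linear interpolation.

    Each original sample is kept, and a new sample halfway between each
    neighboring pair is inserted. This sketch stops at the last input
    sample, since there is no right-hand neighbor for a final midpoint.
    """
    y = []
    for a, b in zip(x, x[1:]):
        y.append(a)
        y.append(a + (b - a) / 2)  # offset by half the difference
    y.append(x[-1])
    return y

def linear_interp_gain(f):
    """Relative magnitude response of linear interpolation.

    Linear interpolation equals zero insertion followed by the FIR
    [0.5, 1, 0.5], whose response is 1 + cos(2*pi*f) at the new rate.
    Dividing by 2 normalizes the DC gain to 1. f is in cycles per sample
    of the new rate (0 to 0.5); the old Nyquist frequency is f = 0.25.
    """
    return (1 + math.cos(2 * math.pi * f)) / 2

print(linear_upsample2([0.0, 1.0, 0.0, -1.0]))  # [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0]
print(20 * math.log10(linear_interp_gain(0.25)))  # about -6 dB at the old Nyquist
print(20 * math.log10(linear_interp_gain(0.3)))   # image just above it: attenuated, not removed
```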

We don’t need to know the exact characteristics of the original low-pass filter. It was certainly of high enough quality to get the job done (or we’d already be suffering from the resulting aliasing), so we just use another. The obvious approach is to convert back to analog, letting the low-pass filter reconstruct the continuous signal, then re-sample it at twice the original rate.
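Doing the “convert to analog and re-sample” step mathematically amounts to evaluating the band-limited reconstruction (the Whittaker-Shannon sinc sum) at the new sample times. The sketch below is a minimal illustration. It assumes a Blackman-windowed sinc, truncated to 32 samples on each side, as a stand-in for an ideal reconstruction filter. The window and the length are arbitrary choices.

```python
import math

def sinc(u):
    """Normalized sinc, sin(pi*u) / (pi*u), with sinc(0) = 1."""
    if u == 0:
        return 1.0
    return math.sin(math.pi * u) / (math.pi * u)

def reconstruct(x, t, half_width=32):
    """Estimate the band-limited signal at time t, in input-sample units.

    Truncated Whittaker-Shannon sum: each sample contributes a sinc pulse
    centered on it, tapered by a Blackman window spanning +/- half_width.
    Samples outside x are treated as zero.
    """
    center = math.floor(t)
    total = 0.0
    for n in range(center - half_width + 1, center + half_width + 1):
        if 0 <= n < len(x):
            u = t - n
            w = (0.42 + 0.5 * math.cos(math.pi * u / half_width)
                 + 0.08 * math.cos(2 * math.pi * u / half_width))
            total += x[n] * sinc(u) * w
    return total

def resample_twice(x, half_width=32):
    """Evaluate the reconstruction every half sample: twice the original rate."""
    return [reconstruct(x, m / 2, half_width) for m in range(2 * len(x) - 1)]

x = [math.sin(2 * math.pi * 0.05 * n) for n in range(200)]
y = resample_twice(x)  # even indices reproduce x; odd indices are the new in-between samples
```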

Converting to analog and back to digital seems a waste—we’re already digital and would like to avoid running through hardware—and filters can be done mathematically in the digital domain. Looking at the diagram, we see that we don’t need two filters. The one with the higher cut-off frequency doesn’t do anything interesting, since the other filter removes those frequencies anyway, so we discard it and keep the lower filter.

The filter is a linear process, and we suspect that we can move it to one side, putting the D/A and A/D components next to each other, where they cancel except for the change in sample rate. Then, conceptually, we just need to double the number of samples to upsample by a factor of two, and low-pass filter. But what does that mean—should we simply repeat each sample? Average the surrounding samples? And does it make a difference whether we filter before or after? We suspect that we need to filter after the rate change, since running a full-band low-pass filter doesn’t really do anything.

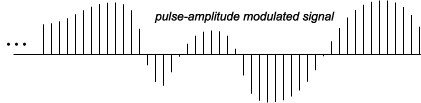

We can see how to double the samples and what the filter does by looking back at how we sampled the signal in the first place. Recall that the existing samples represent the pulse-amplitude modulated result of the analog to digital conversion stage. Let’s look at that signal again:

With that in mind, the in-between samples are obvious—they are all the value zero. The result of inserting a zero between each of our existing samples is that the signal we have doesn’t change at all—and therefore neither does the spectrum—but the sample rate does. Here’s our spectrum again, before inserting zeros:

Here’s what we have after inserting a zero between each sample:

Essentially, we’ve doubled the number of samples, doubling our available bandwidth, but in doing so we’ve revealed an aliased image in the widened passband. Now it’s apparent why we need the low-pass filter—to remove the alias from the passband:
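The claim that inserting zeros leaves the spectrum unchanged while exposing an image can be checked numerically. This minimal Python sketch uses a direct DFT and a 16-sample cosine chosen for illustration. For a zero-stuffed signal of length 2N, DFT bin b equals bin b mod N of the original. The original band is therefore simply repeated above the old Nyquist frequency.

```python
import cmath
import math

def zero_stuff(x, factor=2):
    """Insert factor - 1 zeros after each sample; no filtering."""
    y = []
    for s in x:
        y.append(s)
        y.extend([0.0] * (factor - 1))
    return y

def dft(x):
    """Direct O(N^2) DFT; slow, but fine for a short check."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * b * k / n) for k in range(n))
            for b in range(n)]

x = [math.cos(2 * math.pi * 3 * k / 16) for k in range(16)]  # 3 cycles in 16 samples
X = dft(x)              # 16 bins at the original rate
Y = dft(zero_stuff(x))  # 32 bins at twice the rate

# Every bin of Y equals the matching bin of X (wrapping around): the
# spectrum itself is unchanged, only the band we can see has doubled.
assert all(abs(Y[b] - X[b % 16]) < 1e-9 for b in range(32))

# Peaks in the new band (bins 0 to 16): the original tone and its image
print([b for b in range(17) if abs(Y[b]) > 1])  # [3, 13]
```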

That’s it—to double the sample rate, we insert a zero between each sample, and low-pass filter to clear the extended part of the audio band. Any low-pass filter will do, as long as you pick one steep enough to get the job done, removing the aliased copy without removing much of the existing signal band. Most often, a linear phase FIR filter is used—performance is good at the relatively high cut-off frequency, phase is maintained, and we have good control over its characteristics.
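As a minimal sketch of the complete 2x upsampler, the Python code below inserts zeros and then applies a linear-phase FIR. The filter is designed by the windowed-sinc method, which is one simple option and not necessarily what any particular product uses. The Blackman window and 63 taps are assumptions. The cutoff sits at the old Nyquist frequency, a quarter of the new rate, and the DC gain is about 2 to make up for the inserted zeros. With that cutoff, every other tap is zero (a half-band filter), so the original samples pass through unchanged up to rounding.

```python
import math

def halfband_taps(num_taps=63):
    """Linear-phase lowpass for 2x upsampling (Blackman-windowed sinc).

    Cutoff is 1/4 of the new sample rate (the old Nyquist frequency), and
    the DC gain is about 2 to make up for the inserted zeros. num_taps must
    be odd so the filter is symmetric with an integer delay.
    """
    if num_taps % 2 == 0:
        raise ValueError("num_taps must be odd")
    mid = (num_taps - 1) // 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        if k == 0:
            ideal = 1.0
        elif k % 2 == 0:
            ideal = 0.0  # half-band: every other tap is exactly zero
        else:
            ideal = math.sin(math.pi * k / 2) / (math.pi * k / 2)
        w = (0.42 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
             + 0.08 * math.cos(4 * math.pi * n / (num_taps - 1)))
        taps.append(ideal * w)
    return taps

def upsample2(x, taps):
    """Insert a zero after each sample, then apply the FIR filter.

    The convolution is centered on the middle tap, so y[2k] lines up with
    x[k] (no filter delay); samples outside the signal are treated as zero.
    """
    stuffed = []
    for s in x:
        stuffed += [s, 0.0]
    mid = (len(taps) - 1) // 2
    y = []
    for m in range(len(stuffed)):
        acc = 0.0
        for n, h in enumerate(taps):
            i = m + mid - n
            if 0 <= i < len(stuffed):
                acc += h * stuffed[i]
        y.append(acc)
    return y

x = [math.sin(2 * math.pi * 0.05 * n) for n in range(200)]
y = upsample2(x, halfband_taps())
```

In this direct form, half of the multiplies land on inserted zeros and half of the taps are zero. A polyphase arrangement skips both, which is one of the standard optimizations for real-time use.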

Downsampling

The process of reducing the sample rate—downsampling—is similar, except we low-pass filter first, to reduce the bandwidth, then discard samples. The filter stage is essential, since the signal will alias if we try to fit it into a narrower band without removing the portion that can’t be encoded at the lower rate. So, we set the filter cut-off to half the new, lower sample rate, then simply discard every other sample for a 2:1 downsample ratio. (Yes, the result will be slightly different depending on whether you discard the odd samples or even ones. And no, it doesn’t matter, just as the exact sampling phase didn’t matter when you converted from analog to digital in the first place.)
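A minimal Python sketch of 2:1 downsampling follows the same order the paragraph describes: low-pass filter first, then discard every other sample. The Blackman-windowed sinc filter and its 63 taps are assumptions chosen for illustration. The test tone is 20 kHz at 48 kHz, which lies above the new 12 kHz Nyquist frequency. Discarding samples without filtering folds it down to 4 kHz, while filtering first removes it almost entirely.

```python
import math

def lowpass_taps(cutoff, num_taps=63):
    """Blackman-windowed sinc lowpass with unity DC gain.

    cutoff is in cycles per sample of the rate being filtered (0 to 0.5).
    """
    mid = (num_taps - 1) // 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2 * cutoff if k == 0 else math.sin(2 * math.pi * cutoff * k) / (math.pi * k)
        w = (0.42 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
             + 0.08 * math.cos(4 * math.pi * n / (num_taps - 1)))
        taps.append(ideal * w)
    return taps

def downsample2(x, num_taps=63):
    """2:1 downsampling: lowpass first, then keep every other sample.

    The cutoff is half the new sample rate, which is 0.25 cycles per sample
    of the original rate. The filter is centered (no delay to compensate),
    and samples outside x are treated as zero.
    """
    taps = lowpass_taps(0.25, num_taps)
    mid = (num_taps - 1) // 2
    filtered = []
    for m in range(len(x)):
        acc = 0.0
        for n, h in enumerate(taps):
            i = m + mid - n
            if 0 <= i < len(x):
                acc += h * x[i]
        filtered.append(acc)
    return filtered[::2]  # keep the even-indexed samples; the odd ones would do as well

fs = 48000.0
high = [math.sin(2 * math.pi * 20000 * n / fs) for n in range(480)]  # above the new 12 kHz Nyquist
naive = high[::2]          # no filter: folds down to a 4 kHz tone at full level
clean = downsample2(high)  # filtered first: almost nothing left
```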

Conclusion

We’ve gone through a lot to explain this, but if you understand the reasons, it’s easy to see what to do in other situations. For instance, if we need to upsample by a factor of four, we look at our pulse-amplitude modulated signal and note that there is nothing but a zero level between our existing impulses, so we insert three zeros between our existing samples. Then we low-pass filter the result, with the cut-off frequency set to pass our original frequency band and block everything above it, removing the aliased copies from the new passband.
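Generalizing the recipe to an integer factor L gives the minimal Python sketch below. It inserts L − 1 zeros and then low-pass filters with the cutoff at the original Nyquist frequency, 0.5/L cycles per output sample. The filter gain is about L, so the inserted zeros don’t lower the level. The windowed-sinc design and the `zero_crossings` parameter, the filter half-length in original-rate samples, are assumptions of this sketch.

```python
import math

def upsample(x, factor, zero_crossings=16):
    """Upsample by an integer factor: insert factor - 1 zeros, then lowpass.

    The lowpass is a Blackman-windowed sinc with its cutoff at the original
    Nyquist frequency (0.5 / factor cycles per output sample) and a DC gain
    of about `factor`, which restores the level lost to the inserted zeros.
    zero_crossings sets the filter half-length in original-rate samples.
    """
    half = zero_crossings * factor
    num_taps = 2 * half + 1
    taps = []
    for n in range(num_taps):
        k = n - half
        u = k / factor
        ideal = 1.0 if k == 0 else math.sin(math.pi * u) / (math.pi * u)
        w = (0.42 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
             + 0.08 * math.cos(4 * math.pi * n / (num_taps - 1)))
        taps.append(ideal * w)

    stuffed = []
    for s in x:
        stuffed.append(s)
        stuffed.extend([0.0] * (factor - 1))  # three zeros when factor == 4

    y = []
    for m in range(len(stuffed)):
        acc = 0.0
        for n, h in enumerate(taps):
            i = m + half - n  # centered convolution: no output delay
            if 0 <= i < len(stuffed):
                acc += h * stuffed[i]
        y.append(acc)
    return y

x = [math.sin(2 * math.pi * 0.05 * n) for n in range(100)]
y = upsample(x, 4)  # 400 samples at four times the rate
```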

There are some added wrinkles. For larger conversion ratios, we do the conversion in multiple smaller steps, exploiting optimizations that need less computation than a single large-ratio conversion. For non-integer ratios, such as conversion from 44.1 kHz to 48 kHz, most textbooks suggest a combination of upsampling and downsampling as a ratio of integers (upsampling by a factor of 160 and downsampling by a factor of 147 in this case), but it can also be done in a single step, fractionally.
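The integer ratio comes from reducing 48000/44100, which Python’s `fractions.Fraction` does directly. The resampler below is a minimal sketch of one common single-pass approach, not necessarily the one the article has in mind. Conceptually it inserts 159 zeros, filters, and keeps every 147th sample. For each output it computes only the taps that land on original samples, and it skips outputs that would be discarded. The Blackman-windowed sinc and 16 zero crossings per side are assumptions.

```python
import math
from fractions import Fraction

def conversion_ratio(rate_in, rate_out):
    """Reduce rate_out / rate_in to an (up, down) pair of integers."""
    r = Fraction(rate_out, rate_in)
    return r.numerator, r.denominator

def resample(x, up, down, zero_crossings=16):
    """Rational resampling by up/down in a single pass.

    Conceptually: insert up - 1 zeros, lowpass, keep every down-th sample.
    This sketch never builds the zero-stuffed signal; for each output it
    sums only the filter taps that land on original samples.
    """
    step = max(up, down)          # cutoff at the lower of the two Nyquist frequencies
    half = zero_crossings * step  # filter half-length, in high-rate samples

    def tap(j):
        """Blackman-windowed sinc at high-rate offset j, with DC gain about `up`."""
        if abs(j) >= half:
            return 0.0
        u = j / step
        ideal = 1.0 if j == 0 else math.sin(math.pi * u) / (math.pi * u)
        w = 0.42 + 0.5 * math.cos(math.pi * j / half) + 0.08 * math.cos(2 * math.pi * j / half)
        return ideal * w * up / step

    y = []
    for m in range(len(x) * up // down):
        t = m * down                        # output time on the high-rate grid
        k_lo = max(0, -((half - t) // up))  # ceil((t - half) / up)
        k_hi = min(len(x) - 1, (t + half) // up)
        y.append(sum(x[k] * tap(t - k * up) for k in range(k_lo, k_hi + 1)))
    return y

up, down = conversion_ratio(44100, 48000)
print(up, down)  # 160 147
x = [math.sin(2 * math.pi * 1000 * n / 44100) for n in range(441)]  # 10 ms at 44.1 kHz
y = resample(x, up, down)                                          # 480 samples at 48 kHz
```

Each output costs about 2 × `zero_crossings` multiplies (32 here), even though the conceptual intermediate rate is 44,100 × 160 = 7,056,000 Hz.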

Hi Nigel,

First of all, thanks for these articles. I just found your website today.

I found your website because I need an upsampler/downsampler. This article is a great intro to upsampling and downsampling. In my situation, I’ve implemented a saturation algorithm in a VST plugin, but it produces a lot of aliasing. Now I’m looking for a fix.

Would it be possible to show some C code for how this concept is implemented, especially the filtering? Since this is real-time, speed would be important.

thanks so much in advance,

Michael

Hi Michael,

I’m glad you enjoyed the articles. While I’d like to say I’ll get to your request soon, the fact is that I have a number of things already in the queue that I’m having trouble finding time for, including articles that have been “almost done” for months. But there are other sources for completed and tested multi-platform sample rate conversion libraries, such as libsamplerate. And this page has links to a few other libraries as well: Free Resampling Software. (I haven’t used any of these.)

Nigel

Hi Nigel.

Can I ask what you mean by “images of the signal”?

Is it the signal introduced by adding zero-value samples? (Which happens only when you add them?)

Because for a given signal, playing it at a different sample rate doesn’t introduce any “image” signals.

Not sure I follow the concept 🙂

Good question, and very important. Sampling is a modulation that creates images of the audio band. See Sampling theory—Part 3, and the rest of the series if you haven’t read it. Anything you do to the audio band is reflected in the images. The images will get removed by the DAC filter, but if you do non-linear processing in the digital domain, you need to be aware of how the images can reflect down into the audio band (aliasing). Here’s an example application: Amp simulation oversampling.

Yep, of course I’ve read everything on these topics.

So I’ve done a test.

I generated a 2000 Hz sine wave at a 44.1 kHz sample rate within my synth code.

I then coded it to insert a single zero sample between each pair of actual samples, and looked at the spectrum with SPAN.

I can clearly see what happens: a 1 kHz sine (since the sample rate is doubled, the frequency is halved) plus a 21050 Hz sine (22050 Hz − 1000 Hz), i.e., the mirrored image.

So yes, the image is revealed.

But now if I take two separate sine waves, one at 1000 Hz and one at 21050 Hz, and play them together (normally, without any padding, using an ordinary synth), I would expect the same signal with “zero padding” in between (simulating what happened before).

But in fact the signal is totally different; I can’t see any zero samples in the resulting shape.

Is phase also involved in these mirroring/image artifacts?

Yes, that would be due to the phase relationship.

Oh wow! Really? Aliasing messes up the phase of the mirrored signal? I didn’t know this. Is there an article about this? About how much it shifts it? Can it be evaluated?

OK, now I don’t know if I answered your question…I thought you were asking why generating the two sine waves wouldn’t give you a time series that had alternating zeros…

Yes, exactly. But I think you’re confirming that the alias image will have a different phase, right? So playing two sines with the same phase won’t match until I also match the phase drift introduced by the aliasing, correct?

Right. I’m not sure what you mean by “phase drift introduced by the aliasing”, though.

I mean…

I thought that if a sine is mirrored by aliasing, it should be the same signal, with exactly the same phase (in sync), like playing the same signal twice from a synth (i.e., doubling the level). But it seems that’s not what happens: the mirrored sine mixes in with a different phase, basically creating a different shape (like playing two sines of the same frequency on a synth and changing, i.e. drifting, the phase of the second one).

Is that clearer now?

Sorry, I don’t understand what it is you’re trying to accomplish, and I think it would take an example with diagrams and is probably beyond the scope of the comments section. 🙂

I just realized this phase-shift side effect 🙂

Is the phase shift of the aliased signal measurable? Or is it random?

All harmonics of the digitized signal are modulated by all harmonics of the sampling signal (an impulse train). That would start with the “zeroth” harmonic (DC), which results in the original signal, then the first (a cosine at the sample rate), second (twice the SR), and so on. In this case we probably only care about DC (to keep the signal) and the first. So, it depends on the phases of the harmonics of the original signal—the phases of the sampling harmonics are fixed, a series of cosines relative to the sample period. Since there is no reason to expect the harmonics of the signal to be a multiple of the sampling period, I’d have to ask, phase relative to what? For instance, if you’re sampling a 1 Hz sine at 44.1 kHz, the first image is at 44099 Hz. Not just any 44099 Hz sine, it has a definite phase dictated by the modulation and the original signal’s phase relative to the sample times. But there is no “phase shift”, just a resulting phase.
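The 1 Hz example can be checked numerically. Sampled at 44.1 kHz, the 44099 Hz image lands on exactly the same sample values as the signal when its phase is the negative of the signal’s phase p. This follows from cos(2π(fs − f)n/fs − p) = cos(2πn − (2πfn/fs + p)) = cos(2πfn/fs + p). A minimal Python check, with p = 0.7 chosen arbitrarily:

```python
import math

fs = 44100.0  # sample rate, Hz
f = 1.0       # signal frequency, Hz
p = 0.7       # arbitrary phase of the signal relative to the sample times, radians

def signal(n):
    return math.cos(2 * math.pi * f * n / fs + p)

def image(n, phase):
    """The first (lower) image at fs - f, with a chosen phase."""
    return math.cos(2 * math.pi * (fs - f) * n / fs + phase)

# With phase -p, the 44099 Hz image matches the 1 Hz signal at every sample...
match = max(abs(signal(n) - image(n, -p)) for n in range(1000))
# ...but with the "same" phase +p it does not.
mismatch = max(abs(signal(n) - image(n, p)) for n in range(1000))
print(match < 1e-9, mismatch > 0.1)  # True True
```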

Exactly.

But the phase of the original 1 Hz signal is different from that of the mirrored one (this is what I meant by phase shift).

I mean, they don’t sum to double the level of the signal, like sin(x) + sin(x); the result will be sin(x) + sin(x + d), where d is the phase shift of the mirror image relative to sin(x).

Correct? If so, what is that d? Can it be calculated? How?

Maybe this will help. The first image (or alias) of a sinusoid comes from that sinusoid amplitude modulated by the sample rate. Since cos(a) * cos(b) = (cos(a+b) + cos(a-b)) / 2, the first (lowest) image is cos(a-b), where b is the frequency and phase of the sinusoid and a is the sampling frequency (we can leave its phase out, because it serves as the reference). Here’s a Desmos graph. y0 is the signal, y1 is the a-b image, and you can click the left column of y2 if you want to see a+b. The frequency slider, f, is normalized frequency, so normally it is constrained to between 0 and just under 0.5, but I’ve set it to go as high as 1 so that you can observe aliasing into the passband. p is the phase offset of the sinusoid. One thing you can notice, if you set f to 0.5, is that the image is the same frequency as the signal, but it’s dependent on the phase of the signal. And this makes sense: if you were to sample a 24 kHz sinusoid at 48 kHz (assuming you relax the lowpass filter to allow it), you might get the full sinusoid (if the peaks are aligned to the sampling instants), you might get zero (if the zero crossings are aligned), or you might get something in between. It’s the same as the cancellation between the sinusoid and its image at the same frequency, dependent on phase. First image graph
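The same reasoning explains the zero-stuffing experiment earlier in this thread. Zero-stuff a 2000 Hz cosine with phase p and view the result at 44.1 kHz. It equals half of a 1000 Hz cosine with phase p plus half of a 21050 Hz cosine with phase −p. The minimal Python check below rebuilds the zero-stuffed signal that way; the phase 0.3 is arbitrary. With sines (p = −π/2), the image term comes out as a sine with inverted sign. That is why two sines started at the same phase do not reproduce the zeros.

```python
import math

fs = 44100.0  # playback/analysis rate, Hz
f0 = 2000.0   # the test tone from the thread, Hz
p = 0.3       # arbitrary starting phase, radians

x = [math.cos(2 * math.pi * f0 * n / fs + p) for n in range(200)]

# Zero-stuff, then treat the result as if it were still at 44.1 kHz
stuffed = []
for s in x:
    stuffed += [s, 0.0]

# Rebuild it from two sinusoids: half the tone at f0/2 with phase p,
# and half its image at fs/2 - f0/2 with phase -p
low = f0 / 2          # 1000 Hz
image = fs / 2 - low  # 21050 Hz
rebuilt = [0.5 * math.cos(2 * math.pi * low * m / fs + p)
           + 0.5 * math.cos(2 * math.pi * image * m / fs - p)
           for m in range(len(stuffed))]

max_error = max(abs(a - b) for a, b in zip(stuffed, rebuilt))
print(max_error < 1e-9)  # True
```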